In machine learning, hyperparameter optimization refers to the search for optimal hyperparameters. A hyperparameter is a parameter that controls the training algorithm and whose value, unlike the model parameters learned during training, must be set before the model is trained.

You can boost your machine learning models with hyperparameter tuning (also called hyperparameter optimization). Google describes hyperparameters as follows:

Hyperparameters contain the data that govern the training process itself. Your model parameters are optimized (you could say “tuned”) by the training process: you run data through the operations of the model, compare the resulting prediction with the actual value for each data instance, evaluate the accuracy, and adjust until you find the best values. Hyperparameters are tuned by running your whole training job, looking at the aggregate accuracy, and adjusting. In both cases you are modifying the composition of your model in an effort to find the best combination to handle your problem. (source)

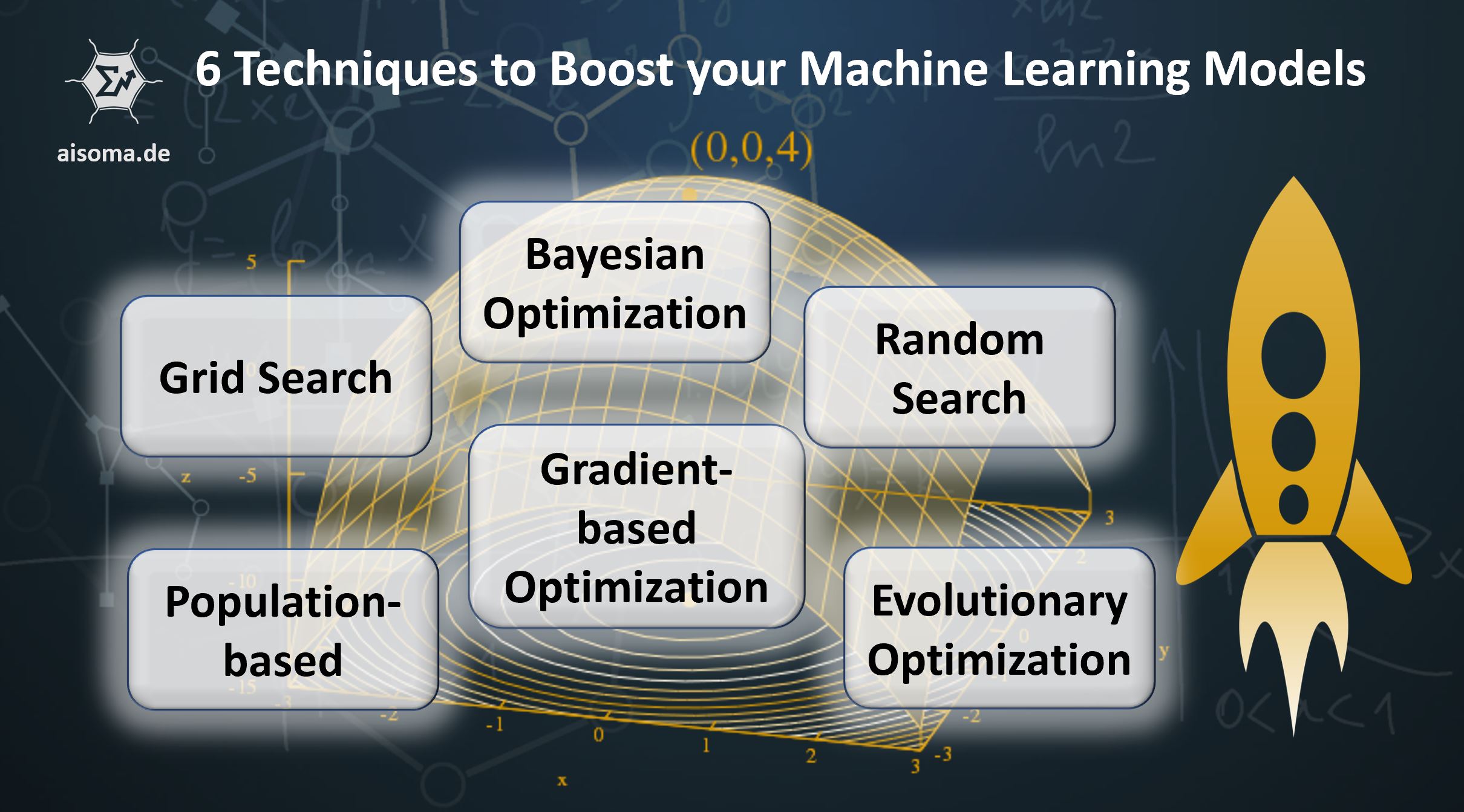

Here are six techniques to boost your machine learning models:

1. Grid Search

Grid search is a traditional way to search for optimal hyperparameters. It performs an exhaustive search over a manually defined subset of the learning algorithm's hyperparameter space. A grid search must be guided by a performance metric, typically measured by cross-validation on the training data or by evaluation on a held-out validation set that was not used during training.

For hyperparameters with real-valued or unbounded spaces, the space must be discretized and restricted to a finite range before the grid search can be run. (More info: Grid Searching in Machine Learning)
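As a rough illustration, the sketch below runs an exhaustive grid search in plain Python. The objective `validation_score` and its hyperparameters `learning_rate` and `depth` are hypothetical stand-ins for a cross-validated metric; in practice, a library routine such as scikit-learn's `GridSearchCV` wraps the same loop around real cross-validation.

```python
import itertools


def validation_score(learning_rate, depth):
    """Hypothetical stand-in for a cross-validated score (higher is better)."""
    return -((learning_rate - 0.1) ** 2) - 0.01 * (depth - 4) ** 2


def grid_search(score_fn, grid):
    """Evaluate every combination in `grid` and return the best one."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    # itertools.product enumerates the full Cartesian product of the value lists.
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# The discretized, bounded search space has to be chosen by hand.
GRID = {"learning_rate": [0.01, 0.1, 1.0], "depth": [2, 4, 8]}

best, score = grid_search(validation_score, GRID)
print(best, score)
```

With this grid, all 3 × 3 = 9 combinations are evaluated and the search returns `learning_rate=0.1`, `depth=4`. The cost grows multiplicatively with every added hyperparameter, which is why the grid has to stay small.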

2. Random Search

In random search, instead of exhaustively trying all combinations, values are sampled at random from the given hyperparameter space. Unlike grid search, random search does not require the space to be discretized. Random search can outperform grid search in both speed and result quality, especially when only a small number of hyperparameters affect the performance of the learning algorithm. The reason is that grid search tries only a few distinct values for each parameter (each of them several times), whereas random sampling tries a new value of every parameter in each trial and therefore explores each dimension much more thoroughly. (More info: Wikipedia)
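A minimal random-search sketch under similar assumptions: `validation_score` is a hypothetical objective over a learning rate and a dropout rate, and the sampling ranges (log-uniform learning rate between 1e-4 and 1, uniform dropout between 0 and 0.5) are illustrative choices. Because values are drawn from continuous distributions, no grid has to be defined.

```python
import math
import random


def validation_score(learning_rate, dropout):
    """Hypothetical objective (higher is better), peaking at lr = 0.1, dropout = 0.3."""
    return -(math.log10(learning_rate) + 1) ** 2 - (dropout - 0.3) ** 2


def random_search(score_fn, n_trials=50, seed=0):
    rng = random.Random(seed)  # seeded for reproducibility
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {
            # Log-uniform sampling is a common choice for scale-type hyperparameters.
            "learning_rate": 10 ** rng.uniform(-4, 0),
            "dropout": rng.uniform(0.0, 0.5),
        }
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


best, score = random_search(validation_score)
print(best, score)
```

The trial budget (`n_trials`) is fixed up front and is independent of the number of hyperparameters, unlike the grid above.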

3. Bayesian Optimization

Bayesian optimization is a global optimization method for noisy black-box functions. Applied to hyperparameter optimization, it builds a probabilistic model (a surrogate) of the function that maps hyperparameter values to the metric evaluated on the validation data. It then iteratively evaluates hyperparameter configurations that look promising under the current model and updates the model with the new results. Bayesian optimization tries to gather as much information as possible about this function, in particular about the location of the optimum. To do so, it balances exploration (sampling regions where little is known about the expected performance) against exploitation (sampling regions where the optimum is expected). In practice, because it makes use of the knowledge collected so far, Bayesian optimization has been shown to achieve better results than grid or random search in fewer evaluations. (More info: Wikipedia)
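The loop described above can be sketched in plain Python for a single hyperparameter in [0, 1]. This is a simplified illustration under stated assumptions, not a production implementation: it uses a Gaussian-process surrogate with a fixed RBF kernel (length scale 0.1, prior variance 1), expected improvement as the acquisition function that trades off exploration and exploitation, and a fixed grid of 101 candidate points. All names and settings are hypothetical.

```python
import math
import random

LENGTH_SCALE = 0.1  # assumed RBF length scale (not tuned)
JITTER = 1e-6       # small diagonal term for numerical stability


def rbf(a, b):
    return math.exp(-((a - b) ** 2) / (2 * LENGTH_SCALE ** 2))


def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def cholesky(m):
    """Lower-triangular L with L @ L.T == m (m symmetric positive definite)."""
    n = len(m)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(m[i][i] - s) if i == j else (m[i][j] - s) / L[j][j]
    return L


def solve_lower(L, b):
    """Forward substitution: solve L x = b."""
    x = []
    for i, bi in enumerate(b):
        x.append((bi - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x


def expected_improvement(mu, sigma, best, xi=0.01):
    """EI for minimization; larger when mu is low (exploit) or sigma is high (explore)."""
    improvement = best - mu - xi
    z = improvement / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return improvement * cdf + sigma * pdf


def bayes_opt(objective, n_init=3, n_iter=10, seed=0):
    rng = random.Random(seed)
    candidates = [i / 100 for i in range(101)]
    xs = rng.sample(candidates, n_init)  # a few random evaluations to start
    ys = [objective(x) for x in xs]
    for _ in range(n_iter):
        # Fit the GP surrogate to all observations so far (y centered on its mean).
        y_mean = sum(ys) / len(ys)
        K = [[rbf(a, b) + (JITTER if i == j else 0.0) for j, b in enumerate(xs)]
             for i, a in enumerate(xs)]
        L = cholesky(K)
        beta = solve_lower(L, [y - y_mean for y in ys])
        best_x, best_ei = None, -1.0
        for c in candidates:
            if c in xs:
                continue
            # Posterior mean and standard deviation at candidate c.
            v = solve_lower(L, [rbf(c, x) for x in xs])
            mu = y_mean + dot(v, beta)
            sigma = math.sqrt(max(1.0 - dot(v, v), 1e-12))
            ei = expected_improvement(mu, sigma, min(ys))
            if ei > best_ei:
                best_x, best_ei = c, ei
        # Evaluate the most promising point and add it to the data.
        xs.append(best_x)
        ys.append(objective(best_x))
    i_best = min(range(len(ys)), key=ys.__getitem__)
    return xs[i_best], ys[i_best]


print(bayes_opt(lambda x: (x - 0.3) ** 2))
```

The surrogate is refit from scratch at every iteration; the Cholesky factorization costs O(n³) in the number of evaluations n, which is acceptable here because each real evaluation (a full training run) is far more expensive.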

4. Gradient-Based Optimization

For some learning algorithms, it is possible to compute the gradient with respect to the hyperparameters and to optimize them by steepest (gradient) descent. Such techniques were first applied to neural networks and were later extended to other models such as support vector machines and logistic regression.

Another approach to obtaining gradients with respect to the hyperparameters is to apply automatic differentiation through the steps of an iterative optimization algorithm.
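To make this concrete, the sketch below differentiates through unrolled gradient-descent steps with respect to a hypothetical ridge penalty `lam`, using a hand-rolled forward-mode automatic differentiation based on dual numbers (frameworks typically use reverse mode instead). The 1-D data, step sizes, and names are assumptions for illustration. The resulting hypergradient of the validation loss is then used to update `lam` by gradient descent.

```python
# Hypothetical 1-D regression data: the model is y ≈ w * x.
X_TRAIN, Y_TRAIN = [1.0, 2.0, 3.0], [2.5, 4.5, 6.5]
X_VAL, Y_VAL = [1.5, 2.5], [3.0, 5.0]


class Dual:
    """Dual number val + der*eps (eps**2 = 0) for forward-mode autodiff."""

    def __init__(self, val, der=0.0):
        self.val, self.der = val, der

    @staticmethod
    def lift(other):
        return other if isinstance(other, Dual) else Dual(float(other))

    def __add__(self, other):
        o = Dual.lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    __radd__ = __add__

    def __sub__(self, other):
        o = Dual.lift(other)
        return Dual(self.val - o.val, self.der - o.der)

    def __mul__(self, other):
        o = Dual.lift(other)
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    __rmul__ = __mul__

    def __truediv__(self, k):
        # Division by a plain number only (enough for averaging here).
        return Dual(self.val / k, self.der / k)


def train(lam, steps=100, eta=0.05):
    """Unrolled gradient descent on mean squared error + lam * w**2."""
    w = Dual(0.0)  # initial weight does not depend on lam
    n = len(X_TRAIN)
    for _ in range(steps):
        grad = sum(2 * x * (w * x - y) for x, y in zip(X_TRAIN, Y_TRAIN)) / n + 2 * lam * w
        w = w - eta * grad  # derivatives propagate through every step
    return w


def val_loss(w):
    return sum((w * x - y) * (w * x - y) for x, y in zip(X_VAL, Y_VAL)) / len(X_VAL)


def tune(lam=0.0, outer_steps=50, outer_lr=0.2):
    """Gradient descent on lam using d(val_loss)/d(lam)."""
    for _ in range(outer_steps):
        loss = val_loss(train(Dual(lam, 1.0)))  # seed d(lam)/d(lam) = 1
        lam = max(0.0, lam - outer_lr * loss.der)
    return lam


print(tune())
```

For this toy data the fully trained ridge weight is w = 31 / (14 + 3·lam), and the validation points lie exactly on a line of slope 2, so `tune()` should approach lam ≈ 0.5, where the trained weight equals 2.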

5. Evolutionary Optimization

In evolutionary optimization, evolutionary algorithms are used to search for the global optimum of a noisy black-box function. Evolutionary hyperparameter optimization follows a process inspired by biological evolution:

- Create an initial population of random solutions (randomly selected hyperparameter values)

- Evaluate the hyperparameter configurations and determine the fitness (e.g., the average performance in tenfold cross-validation)

- Order the hyperparameter configurations according to their fitness

- Replace the worst-performing configurations with new ones generated by recombination and mutation

- Repeat the evaluation, ordering, and replacement steps until satisfactory performance is achieved or performance stops improving
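The steps above can be sketched as a small genetic algorithm over two hypothetical hyperparameters. The `fitness` function stands in for the mean cross-validation score, and the population size, uniform crossover, Gaussian mutation scale, and patience-based stopping rule are illustrative assumptions rather than a specific published method.

```python
import random

# Hypothetical search space: (learning_rate, regularization) with bounds.
BOUNDS = [(0.0, 1.0), (0.0, 2.0)]


def fitness(cfg):
    """Hypothetical stand-in for mean cross-validation score (higher is better)."""
    learning_rate, reg = cfg
    return -((learning_rate - 0.1) ** 2 + (reg - 0.5) ** 2)


def clip(value, lo, hi):
    return min(max(value, lo), hi)


def evolve(pop_size=20, generations=50, patience=10, seed=0):
    rng = random.Random(seed)
    # Initial population of random configurations.
    pop = [[rng.uniform(lo, hi) for lo, hi in BOUNDS] for _ in range(pop_size)]
    best, stale = float("-inf"), 0
    for _ in range(generations):
        # Evaluate and order by fitness, best first.
        pop.sort(key=fitness, reverse=True)
        top = fitness(pop[0])
        # Stop early once the best fitness has not improved for `patience` generations.
        if top > best + 1e-12:
            best, stale = top, 0
        else:
            stale += 1
            if stale >= patience:
                break
        # Keep the better half; refill the rest with recombined, mutated offspring.
        survivors = pop[: pop_size // 2]
        children = []
        while len(survivors) + len(children) < pop_size:
            a, b = rng.sample(survivors, 2)
            # Uniform crossover: each gene comes from one of the two parents.
            child = [rng.choice(pair) for pair in zip(a, b)]
            # Gaussian mutation scaled to each hyperparameter's range, clipped to bounds.
            child = [clip(g + rng.gauss(0.0, 0.05 * (hi - lo)), lo, hi)
                     for g, (lo, hi) in zip(child, BOUNDS)]
            children.append(child)
        pop = survivors + children
    return max(pop, key=fitness)


best_cfg = evolve()
print(best_cfg, fitness(best_cfg))
```

Because the better half always survives, the best configuration found so far is never lost between generations.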

Evolutionary optimization has been applied to hyperparameter optimization for statistical learning algorithms, to automated machine learning, and to the search for deep neural network architectures. (More info: Wikipedia)

6. Population-Based Training

Population-Based Training (PBT) learns both hyperparameter values and network weights. Multiple training processes run independently, each with different hyperparameters. Poorly performing models are iteratively replaced by models that adopt the weights of a better performer together with modified versions of its hyperparameter values. This modification lets the hyperparameters evolve during training and eliminates the need for manual hyperparameter tuning. The process makes no assumptions about model architecture, loss functions, or training procedures. (More info: Population-Based Methods)
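A toy simulation of this exploit-and-explore loop, assuming each worker trains a single weight `w` on the hypothetical loss (w - 3)² with its own learning rate. After every round, the bottom quarter of workers copies the weights and learning rate of a randomly chosen top-quarter worker (exploit) and then multiplies the learning rate by 0.8 or 1.2 (explore). The quarter split, perturbation factors, and round lengths are illustrative choices.

```python
import random


def train_step(w, lr):
    """One gradient-descent step on the hypothetical loss (w - 3)**2."""
    return w - lr * 2.0 * (w - 3.0)


def loss(w):
    return (w - 3.0) ** 2


def pbt(n_workers=8, rounds=20, steps_per_round=5, seed=0):
    rng = random.Random(seed)
    # Each worker holds its own weights and its own learning rate.
    workers = [{"w": 0.0, "lr": 10 ** rng.uniform(-3, -1)} for _ in range(n_workers)]
    for _ in range(rounds):
        # Independent training with each worker's current hyperparameter.
        for wk in workers:
            for _ in range(steps_per_round):
                wk["w"] = train_step(wk["w"], wk["lr"])
        workers.sort(key=lambda wk: loss(wk["w"]))  # best first
        k = max(1, n_workers // 4)
        for loser in workers[-k:]:
            winner = rng.choice(workers[:k])
            loser["w"] = winner["w"]                              # exploit: copy weights
            loser["lr"] = winner["lr"] * rng.choice([0.8, 1.2])   # explore: perturb
    return min(workers, key=lambda wk: loss(wk["w"]))


best = pbt()
print(best, loss(best["w"]))
```

Training is never restarted: replaced workers continue from the copied weights, which is what distinguishes PBT from tuning methods that run each configuration from scratch.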


Further readings: