Large language models like GPT-5 and Claude have captured the spotlight. However, a quiet revolution is underway with the emergence of small language models (SLMs). These are models with significantly fewer parameters than their larger counterparts, typically ranging from a few million to a few billion. While they may not have the raw power or breadth of knowledge of LLMs, SLMs are poised to become the future of AI for several compelling reasons.

The rise of Small Language Models shows us that in AI, it’s not size that defines intelligence, but precision, efficiency, and purpose.


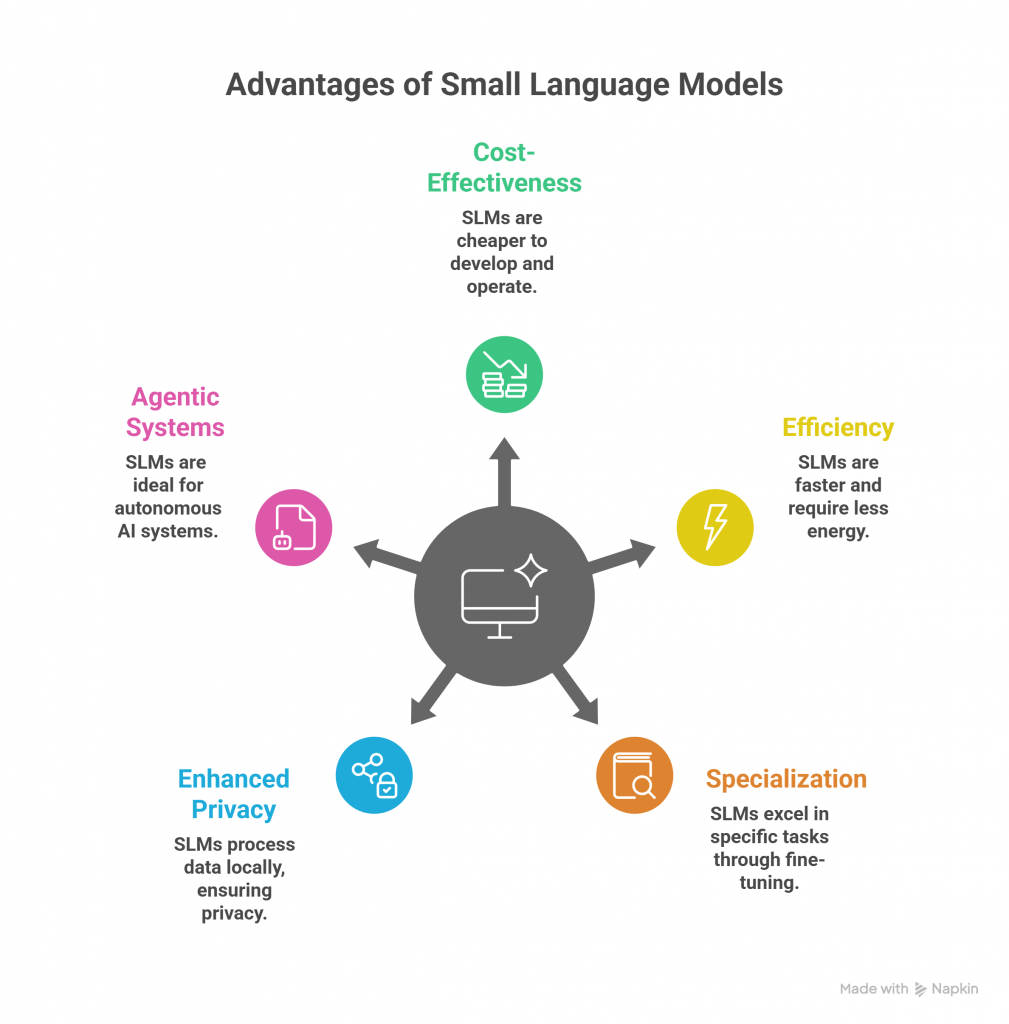

The Advantages of Small Language Models

SLMs are not just smaller versions of LLMs. They represent a fundamental shift in how we think about and deploy AI. Their key advantages are:

- Cost-Effectiveness: Training and running LLMs require immense computational resources, leading to high costs. SLMs, on the other hand, are much cheaper to develop and operate, making them accessible to a broader range of businesses and developers.

- Efficiency: Due to their smaller size, SLMs run faster and require less energy. This makes them ideal for applications on edge devices, such as smartphones, smart speakers, and other IoT devices, where low latency and low power consumption are critical.

- Specialization and Fine-Tuning: While LLMs are general-purpose, SLMs can be fine-tuned on specific datasets to excel at a particular task. This specialization can yield highly accurate, domain-specific performance that sometimes surpasses general-purpose LLMs within that niche. For example, an SLM trained on medical data can be a powerful tool for diagnostic assistance.

- Enhanced Privacy and Security: Since SLMs can run locally on a device, sensitive data doesn’t need to be sent to a cloud server for processing. This on-device processing significantly enhances data privacy and security.

- Key to Agentic and Modular AI Systems: In agentic architectures (systems that act autonomously on behalf of users), SLMs often outperform LLMs in cost, flexibility, and specialization. Recent research argues that capability, rather than parameter count, is what matters, which makes SLMs well suited to scalable, efficient agentic workflows.
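One way to realize this modular pattern is a dispatcher that sends well-defined, routine tasks to specialist SLMs and escalates everything else to a general-purpose model. The sketch below is illustrative only: the model names (`summarizer-slm`, `coder-slm`, `extractor-slm`, `general-llm`) are hypothetical, and real inference calls are replaced by stubs. In a real system the task type might come from a classifier or from the agent's tool definitions.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Route:
    name: str                      # hypothetical model identifier
    handler: Callable[[str], str]  # stand-in for a real inference call


def make_stub(model_name: str) -> Callable[[str], str]:
    # Placeholder only: a real system would call a local or hosted model here.
    return lambda prompt: f"[{model_name}] {prompt}"


# Hypothetical registry mapping task types to specialist SLMs.
SPECIALISTS: Dict[str, Route] = {
    task: Route(name, make_stub(name))
    for task, name in [
        ("summarize", "summarizer-slm"),
        ("code", "coder-slm"),
        ("extract", "extractor-slm"),
    ]
}

# Hypothetical general-purpose model used when no specialist matches.
FALLBACK = Route("general-llm", make_stub("general-llm"))


def dispatch(task_type: str, prompt: str) -> str:
    """Send well-defined tasks to a specialist SLM; escalate everything else."""
    route = SPECIALISTS.get(task_type, FALLBACK)
    return route.handler(prompt)


if __name__ == "__main__":
    print(dispatch("code", "Fix the off-by-one error in parse()"))
    print(dispatch("plan", "Draft a three-step migration plan"))
```

Here the first call is handled by the coding specialist and the second, which matches no registered task type, falls back to the general model. Keeping the registry as plain data means a specialist can be added or swapped without touching the dispatch logic.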

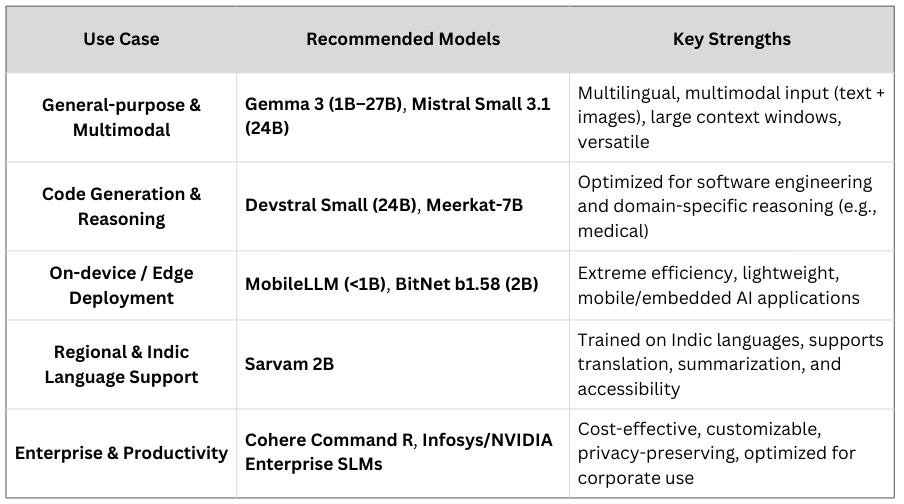

Promising SLMs for Different Use Cases

Here are some standout small language models making an impact:

1. General-purpose & multimodal

- Gemma 3 (1B–27B) by Google DeepMind: The 4B, 12B, and 27B sizes accept text and image input, support many languages, and offer context windows of up to 131,072 tokens (128K). The wider Gemma family also includes specialized variants such as MedGemma (medical), CodeGemma (coding), and DolphinGemma (dolphin communication studies).

Link: https://ai.google.dev/gemma/docs/core?hl=de

- Mistral 7B & Mistral Small 3.1 (24B):

- Mistral 7B outperforms the larger Llama 2 13B on all benchmarks reported in Mistral's announcement.

Link: https://mistral.ai/news/announcing-mistral-7b

- Mistral Small 3.1, released in March 2025, adds image and document understanding and supports long context. It can be deployed on a single RTX 4090 or a Mac with 32 GB of RAM.

Link: https://mistral.ai/news/mistral-small-3-1

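A quick way to see what the single-GPU claim for Mistral Small 3.1 implies is to estimate the memory needed for the weights alone. The sketch below is back-of-the-envelope arithmetic, not a deployment tool. It assumes roughly 24 billion parameters, the RTX 4090's 24 GB of VRAM, a 32 GB Mac, and an arbitrary 20% headroom for the KV cache, activations, and runtime overhead; real requirements vary with context length and inference engine.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Memory needed to store the weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def fits(n_params: float, bits_per_weight: float, budget_gb: float,
         headroom: float = 0.2) -> bool:
    """True if the weights fit while leaving `headroom` (a fraction of the
    budget) free for the KV cache, activations, and runtime overhead.
    The 20% default is an illustrative assumption, not a measured value."""
    return weight_memory_gb(n_params, bits_per_weight) <= budget_gb * (1 - headroom)


N_PARAMS = 24e9  # Mistral Small 3.1, roughly 24 billion parameters
BUDGETS_GB = {"RTX 4090 (24 GB VRAM)": 24, "Mac (32 GB unified memory)": 32}

if __name__ == "__main__":
    for bits in (16, 8, 4):
        need = weight_memory_gb(N_PARAMS, bits)
        verdicts = ", ".join(
            f"{device}: {'fits' if fits(N_PARAMS, bits, gb) else 'too large'}"
            for device, gb in BUDGETS_GB.items()
        )
        print(f"{bits:>2}-bit weights need {need:.1f} GB -> {verdicts}")
```

Under these assumptions, 16-bit weights (48 GB) exceed both budgets, 8-bit weights (24 GB) fit only on the 32 GB Mac, and 4-bit weights (12 GB) fit on either. This suggests the single-GPU figure assumes a quantized model.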

2. Coding & reasoning

- Devstral Small (24B): Optimized for agentic software engineering and coding workloads, and released as open source under the Apache 2.0 license.

Link: https://mistral.ai/news/devstral

- Meerkat-7B (medical): Trained on chain-of-thought data drawn from medical textbooks. It surpassed the USMLE passing threshold, narrowing the performance gap with GPT-4 on complex clinical tasks.

Link: https://huggingface.co/dmis-lab/meerkat-7b-v1.0

3. On-device and extreme efficiency

- MobileLLM family (<1B parameters): Designed specifically for mobile deployment, using a deep-and-thin architecture together with techniques such as embedding sharing and grouped-query attention. On API-calling tasks, its accuracy comes close to that of LLaMA-v2 7B.

Link: https://arxiv.org/abs/2402.14905

- BitNet b1.58 (2B parameters, 1.58-bit weights): Trained natively with ternary weights (-1, 0, +1), it delivers performance comparable to full-precision models of similar size at a much smaller memory footprint.

Link: https://huggingface.co/microsoft/bitnet-b1.58-2B-4T
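The "1.58 bits" comes from log2(3) ≈ 1.58: each weight takes one of three values. The sketch below illustrates the absmean quantization function described in the BitNet b1.58 papers, which divides the weights by their mean absolute value and rounds each entry to -1, 0, or +1. It is a simplified pure-Python illustration on a flat list with a single scale; the actual models apply this during training inside their linear layers and also quantize activations, which is not shown here.

```python
import math
from typing import List, Tuple

# Three possible values per weight carry log2(3) bits of information.
BITS_PER_TERNARY_WEIGHT = math.log2(3)  # about 1.585


def absmean_ternary(weights: List[float], eps: float = 1e-5) -> Tuple[List[int], float]:
    """Quantize weights to {-1, 0, +1} using a single absmean scale.

    scale = mean(|w|);  q = clip(round(w / (scale + eps)), -1, 1)
    """
    if not weights:
        raise ValueError("weights must be non-empty")
    scale = sum(abs(w) for w in weights) / len(weights)
    # Python's round() breaks exact .5 ties toward the even integer; this only
    # matters for values landing exactly halfway between two levels.
    q = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Approximate reconstruction: each ternary value times the shared scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.42, -0.07, -0.9, 0.15, 0.0, -0.31]
    q, scale = absmean_ternary(w)
    print(q, round(scale, 4))  # [1, 0, -1, 0, 0, -1] 0.3083
    print([round(x, 3) for x in dequantize(q, scale)])
```

For a 2-billion-parameter model, ideal ternary storage works out to roughly 2e9 × 1.585 / 8 ≈ 0.4 GB, versus about 4 GB at 16 bits; practical storage formats may use somewhat more than the theoretical 1.58 bits per weight.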

4. Regional and Indic language support

- Sarvam 2B: Billed as India’s first open-source foundation model, optimized for Indic languages and trained on 4 trillion tokens for tasks such as translation and summarization.

Link: https://huggingface.co/rachittshah/sarvam-2b-v0.5-GGUF

5. Enterprise platforms & tools

- Cohere’s Command R: A comparatively compact model (35B parameters) fine-tuned for summarization and analysis; Cohere reports that it is more accurate and cost-efficient than GPT-4 on meeting, financial, and scientific content.

Link: https://docs.cohere.com/docs/command-r

- Infosys & NVIDIA enterprise SLMs: Developed jointly for domain-specific workflows, helping companies regain control over their AI capabilities.

Link: https://www.infosys.com/newsroom/press-releases/2024/launch-small-language-models.html

At a Glance

SLMs are the Future

SLMs are not just an alternative. They are becoming the backbone of efficient, scalable, and trustworthy AI systems. Their efficiency, specialization, cost-effectiveness, and privacy make them ideal for modular and hybrid AI ecosystems. Enterprises are already embracing this shift by building tailored models, and platforms are enabling rapid deployment for real-world needs.

AISOMA

Contact: info@aisoma.de